Model Production Monitoring (MPM)

Comet's Model Production Monitoring (MPM) helps you maintain high-quality ML models by monitoring predictions from models deployed in production and alerting on defective ones. MPM supports all model types and lets you monitor all your models in one place. It integrates with Comet Experiment Management, so you can track model performance from training to production.

Comet MPM is an optional module that can be installed alongside Experiment Management (EM), but only on Kubernetes and KAIO deployments.

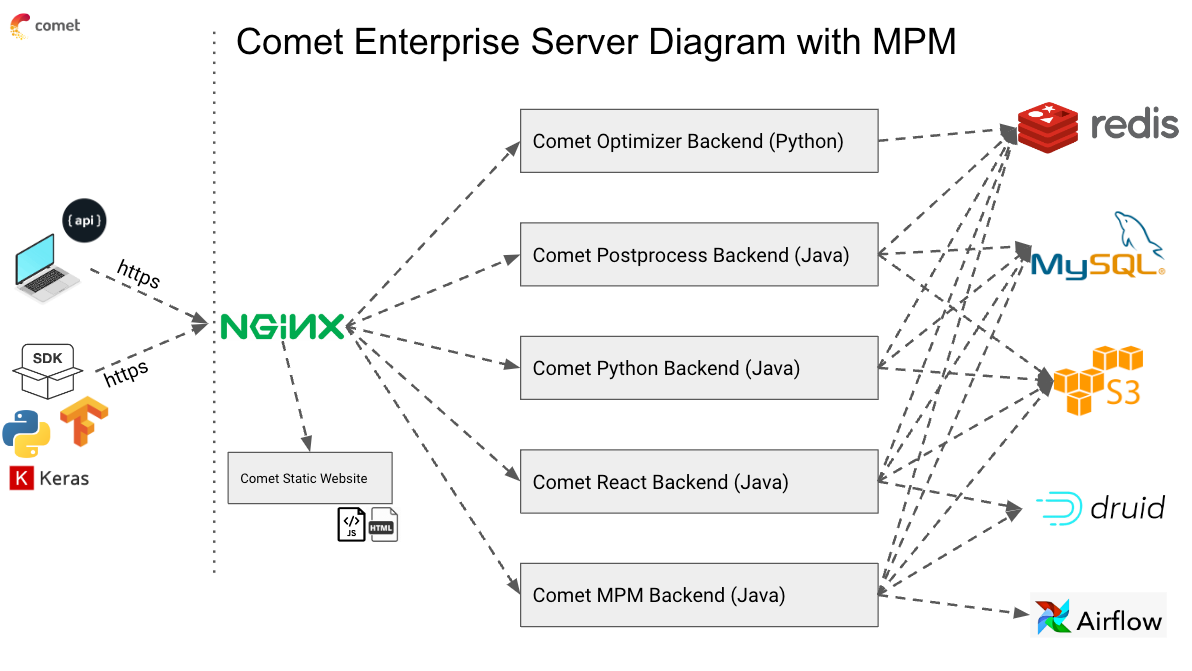

Architecture

The Model Production Monitoring (MPM) infrastructure is composed of two primary services:

Druid: Druid serves as the real-time database for archiving and querying monitoring data. The Druid components are deployed via a subchart within the Comet Helm chart. MPM uses Druid's Bloom Filter, which requires a Redis cache separate from the primary Redis cache that Comet uses, so the Helm chart deploys a dedicated Redis instance inside the Kubernetes cluster. Because AWS ElastiCache does not support Bloom Filter, this Druid Redis instance cannot run on AWS ElastiCache.

Airflow: Airflow is used to orchestrate the ingestion and processing of events by Druid. The Airflow components are also deployed via a subchart within the Comet Helm chart.

Deployment

Before applying the Helm chart, refer to our Helm chart documentation, which covers the necessary Helm commands. Then follow the steps below to enable MPM.

To enable Comet MPM using a Helm chart, update your override values by setting the enabled field for the mpm section to true:

    # ...
    comet:
      # ...
      mpm:
        enabled: true
    # ...

However, MPM will NOT work until the additional Druid and Airflow stacks are also configured, as outlined in the Druid and Airflow section below.

Resource Requirements

Before enabling MPM, make sure you have prepared the required compute resources for your Kubernetes cluster to ensure a smooth deployment.

The MPM application itself should be given its own compute reservations, but it can be deployed into the node pool of an existing Comet EM deployment without adding more nodes to the pool. This is NOT true for the MPM Data Layers (Druid and Airflow), which must be given significant additional compute resources to run properly.

For more details on these requirements, please refer to the MPM section of our Resource Requirements Page.

Druid and Airflow

To enable the deployment of the MPM Data Layer dependencies (Druid + Airflow), set the following in your override values:

    # ...
    backend:
      # ...
      mpm:
        # ...
        druid:
          enabled: true
          # ...
    # ...

As explained in the Resource Requirements Page, we recommend assigning both Druid and Airflow to their own node pools.

The nodeSelector (defaultNodeSelector for Airflow) lets you set a mapping of labels that must be present on any node on which the corresponding pods will be scheduled to run.

In the following example, the desired node pools have a label called nodegroup_name, set to druid and airflow, respectively.

    # ...
    druid:
      # ...
      broker:
        nodeSelector:
          nodegroup_name: druid
      # ...
      coordinator:
        nodeSelector:
          nodegroup_name: druid
      # ...
      overlord:
        nodeSelector:
          nodegroup_name: druid
      # ...
      historical:
        nodeSelector:
          nodegroup_name: druid
      # ...
      middleManager:
        nodeSelector:
          nodegroup_name: druid
      # ...
      router:
        nodeSelector:
          nodegroup_name: druid
      # ...
      zookeeper:
        nodeSelector:
          nodegroup_name: druid
    # ...
    airflow:
      defaultNodeSelector:
        nodegroup_name: airflow
    # ...
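For these selectors to match, the nodes in each pool must actually carry the label. As a rough illustration of the matching rule (not output from a real cluster), an abridged Node object that Druid pods could be scheduled on might look like the following. The node name is hypothetical; only the nodegroup_name label comes from the example above.

```yaml
# Abridged Node object, as returned by the Kubernetes API.
apiVersion: v1
kind: Node
metadata:
  name: druid-pool-node-1      # hypothetical node name
  labels:
    nodegroup_name: druid      # must equal the value used in nodeSelector
```

A pod whose nodeSelector asks for nodegroup_name: druid stays Pending if no schedulable node carries that exact key and value, so it is worth confirming the labels on the node pool before enabling the Data Layer.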

Additional Configuration

The values above are the defaults from override-values.yaml and work as-is with the internally provisioned infrastructure dependencies. To use external infrastructure dependencies, update them as follows:

By default, the Helm chart configures MPM to use an automatically created archive bucket in the internal MinIO instance that the chart also deploys.

When not using MinIO (as is recommended for production deployments), you will need to create a bucket for MPM and configure its name:

    # ...
    backend:
      # ...
      mpm:
        # ...
        druid:
          # ...
          s3:
            archive:
              bucket: comet-druid-bucket
          # ...
    # ...

Druid also needs a user to communicate with the Comet MySQL server. You can enable auto-creation of this user in the MySQL database by turning on the following setting:

    # ...
    backend:
      # ...
      mpm:
        # ...
        druid:
          # ...
          mysql:
            createUser: true
    # ...

To connect to the database and create the user, this setting uses the credentials from .Values.mysqlInternal.auth.rootPassword when .Values.mysql.enableInternalMySQL is in use, or from .Values.mysql.username and .Values.mysql.password otherwise. When using external MySQL, the specified credentials must have the permissions needed to create a user.
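As a minimal sketch of the external MySQL case, the fragment below combines the value paths named above. It assumes that setting enableInternalMySQL to false is how the external database is selected; the username and password are placeholders, and the other connection settings (host, port, and so on) are omitted because they are not covered here.

```yaml
mysql:
  enableInternalMySQL: false   # assumption: false selects an external MySQL server
  username: comet_admin        # placeholder; needs permission to create users
  password: change-me          # placeholder
backend:
  mpm:
    druid:
      mysql:
        createUser: true       # create the Druid user with the credentials above
```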

Assigning Resources to the MPM Application Workload

To specify the node pool for the MPM application, use nodeSelector, which sets a mapping of labels that must be present on any node on which the MPM pods will be scheduled to run.

    # ...
    backend:
      # ...
      mpm:
        nodeSelector:
          agentpool: comet-mpm
        # tolerations: []
        # affinity: {}
    # ...
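If the dedicated node pool is also tainted to keep other workloads off it, the commented-out tolerations field would need a matching entry. The sketch below assumes the chart passes tolerations through to the pod spec in the standard Kubernetes format; the taint key and value are hypothetical and must match whatever taint your node pool actually carries.

```yaml
backend:
  mpm:
    nodeSelector:
      agentpool: comet-mpm
    tolerations:
      - key: dedicated         # hypothetical taint key
        operator: Equal
        value: comet-mpm       # hypothetical taint value
        effect: NoSchedule
```

The nodeSelector steers the MPM pods onto the pool, while the toleration only permits them to run on tainted nodes, so the two are typically used together.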

To set aggressive resource reservations/requests or adjust the pod count, use the following settings:

WARNING: When setting aggressive resource reservations, you must either have spare nodes or much larger nodes if you wish to maintain availability when updating the pods. Otherwise, you will not have enough capacity to run more than your configured pod count and will need to scale down and back up to replace pods.

    # ...
    backend:
      # ...
      mpm:
        # replicaCount: 3
        memoryRequest: 32Gi
        # memoryLimit: 32Gi
        cpuRequest: 16000m
        # cpuLimit: 16000m
    # ...
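To make the warning above concrete, here is a rough capacity estimate using the example values with replicaCount uncommented. It assumes the pods are replaced by a rolling update that starts one new pod before stopping an old one; the actual update strategy depends on how the chart defines the workload.

```yaml
backend:
  mpm:
    replicaCount: 3        # 3 pods in steady state
    memoryRequest: 32Gi    # 3 x 32Gi = 96Gi reserved
    cpuRequest: 16000m     # 3 x 16 CPU = 48 CPU reserved
# During such an update a 4th pod runs briefly, so the node pool needs room
# for 4 x 32Gi = 128Gi of memory and 4 x 16 = 64 CPU to avoid downtime.
```

If the pool is sized for exactly three pods, the replacement pod cannot be scheduled, which is the situation where scaling down and back up becomes necessary.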